I'm a mid-level software engineer. I believe in writing beautiful code, developing accessible solutions, and giving back to the coding world.

preconnect resource hint and the crossorigin attribute

2019-04-05

Resource hints help pages load faster by telling the browser what assets it'll need in the future.

Sometimes you don't know ahead of time what assets are needed in a page. But you know they'll be hosted on a particular domain. In that case, you can give the browser a head start with the preconnect resource hint.

<head>
  <!-- other stuff -->
  <link rel="preconnect" href="https://cdn.example.com/" crossorigin>
</head>

Without resource hints

Suppose a page on my site uses a stylesheet on another origin, and that stylesheet defines a custom font.

@font-face {
  font-family: 'Custom Font';
  src: url(https://mac9416.com/demo/preconnect/font.ttf) format('truetype');
}

body {
  font-family: 'Custom Font';
}

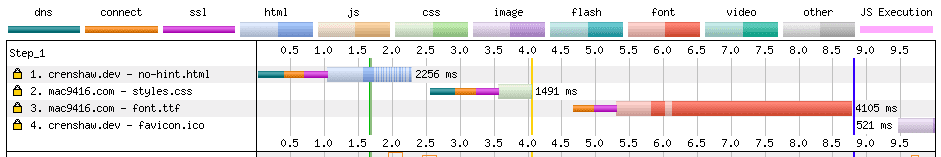

Without resource hints, my page's HTML must be parsed before the stylesheet can be downloaded.

(In this example, I've moved the stylesheet link below a massive block of "lorem ipsum" text, to simulate a late-discovered stylesheet and make the waterfall chart easier to read.)

In the above WebPageTest waterfall chart, the page is completely downloaded and parsed before Chrome connects to mac9416.com to download the stylesheet.

preconnect with crossorigin

The asset will load faster if I add a preconnect hint for mac9416.com. (Remember, we're pretending we don't know specifically which assets will be downloaded from mac9416.com. Otherwise we would add preload hints for an even greater performance boost.)

<head>
  <!-- other stuff -->
  <link rel="preconnect" href="https://mac9416.com/" crossorigin>
</head>

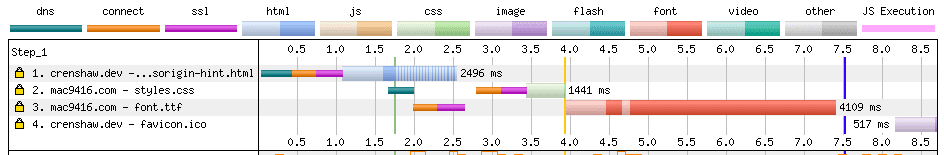

With a preconnect hint in place, the waterfall looks better. A DNS lookup for mac9416.com happens immediately after the first chunk of HTML is downloaded, and the connection used to download my custom font is opened as soon as the DNS lookup finishes. But there's another connection to mac9416.com that's initiated only after the HTML is downloaded and parsed. That second connection is used to download the stylesheet.

preconnect without crossorigin

In the above code sample, I naively copied an example and left the crossorigin attribute in place. I just assumed it meant "this connection is to a different domain" -- which it is. Let's see what happens when I remove that attribute.

<head>
  <!-- other stuff -->
  <link rel="preconnect" href="https://mac9416.com/">
</head>

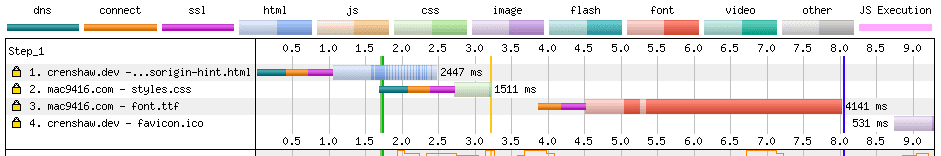

In this waterfall chart, we seem to have the same problem, but with the connections swapped. The connection used to download styles happens immediately, but the connection used to download fonts begins after the stylesheet is downloaded and parsed.

Why did crossorigin break things?

What's going on? I incorrectly assumed crossorigin simply meant "the target is on another domain." But the browser could infer that by comparing the <link> element's href attribute to the current page's origin. So what is the crossorigin attribute for?

crossorigin actually tells the browser that "resources on this connection are downloaded using CORS." A bare crossorigin attribute is equivalent to crossorigin="anonymous", so by default it specifically means "CORS without credentials."

CORS improves web security. That's all I'll say about it here, because smarter people have explained it elsewhere much better than I could.

To speed up this web page, all we need to know is that resources downloaded without CORS are downloaded on a separate connection from those that use CORS.

A quick glance at a list of requests that use CORS shows that our font request will use CORS, but the stylesheet request will not.
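To make that rule concrete, here's a rough JavaScript sketch. It's a simplified model, not browser code: the table and function names are made up for illustration, and it assumes every resource lives on a different origin than the page and is loaded without its own crossorigin attribute.

```javascript
// Simplified model of which preconnect hint a cross-origin resource needs.
// Assumption: each resource is loaded the default way, with no crossorigin
// attribute on its own tag.
const DEFAULT_FETCH = {
  stylesheet: { cors: false }, // <link rel="stylesheet">
  script: { cors: false },     // classic <script src>
  image: { cors: false },      // <img>
  font: { cors: true },        // fonts loaded via @font-face always use CORS
};

// True if this kind of resource is fetched with CORS by default.
function usesCors(resourceType) {
  const entry = DEFAULT_FETCH[resourceType];
  if (!entry) throw new Error(`Unknown resource type: ${resourceType}`);
  return entry.cors;
}

// Returns which flavors of preconnect hint cover the given resources:
// 'plain' (no crossorigin) and/or 'crossorigin'.
function hintsNeeded(resourceTypes) {
  const needed = new Set();
  for (const type of resourceTypes) {
    needed.add(usesCors(type) ? 'crossorigin' : 'plain');
  }
  return [...needed].sort();
}

// A stylesheet plus a font needs both flavors of hint.
console.log(hintsNeeded(['stylesheet', 'font']));
```

In this model a page that only pulls a stylesheet from the other origin needs just the plain hint, and a page that only pulls fonts needs just the crossorigin one.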

preconnect with crossorigin and without

So let's use two preconnect hints, one for non-CORS requests, and the other for CORS requests.

<head>
  <!-- other stuff -->
  <link rel="preconnect" href="https://mac9416.com/">
  <link rel="preconnect" href="https://mac9416.com/" crossorigin>
</head>

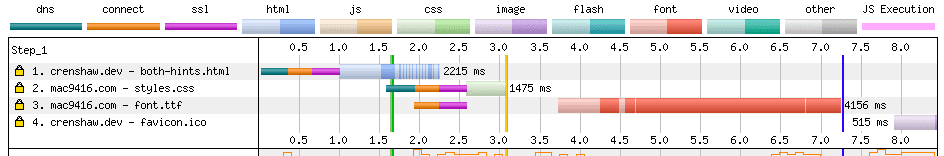

This waterfall chart looks much better. With two resource hints in place, both connections to mac9416.com are established immediately after the first chunk of HTML is parsed. Incidentally, we've achieved the fastest time to document complete (the blue line in the waterfall chart) of all the tests.

Just for good measure, I'll add a dns-prefetch hint for browsers that don't support the preconnect hint.

<head>
  <!-- other stuff -->
  <link rel="dns-prefetch" href="https://mac9416.com/">
  <link rel="preconnect" href="https://mac9416.com/">
  <link rel="preconnect" href="https://mac9416.com/" crossorigin>
</head>

There's no need for a crossorigin attribute, since DNS queries are performed without CORS.
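If you generate your <head> from a template or build script, the same set of hints could be produced by a small helper. This is only a sketch: buildHints and its option names are hypothetical, not part of any library.

```javascript
// Builds the <link> tags for one origin as strings.
// buildHints and its options (cors, noCors, dnsPrefetch) are made-up names.
function buildHints(origin, { cors = false, noCors = false, dnsPrefetch = true } = {}) {
  const tags = [];
  if (dnsPrefetch && (cors || noCors)) {
    // DNS lookups don't involve CORS, so this hint never takes crossorigin.
    tags.push(`<link rel="dns-prefetch" href="${origin}">`);
  }
  if (noCors) {
    // Warms up the connection used by non-CORS requests (e.g. the stylesheet).
    tags.push(`<link rel="preconnect" href="${origin}">`);
  }
  if (cors) {
    // Warms up the connection used by CORS requests (e.g. the font).
    tags.push(`<link rel="preconnect" href="${origin}" crossorigin>`);
  }
  return tags;
}

// Prints the same three tags as the snippet above.
console.log(buildHints('https://mac9416.com/', { cors: true, noCors: true }).join('\n'));
```

Passing only { cors: true } or only { noCors: true } drops the hint you don't need, which keeps the browser from opening a connection it will never use.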

For this experiment I've ignored crossorigin="use-credentials". I suspect CORS requests with credentials would require a third hint.

Summary

The crossorigin attribute, when used with rel="preconnect", doesn't describe where the target origin is but rather what kind of assets will be downloaded from that origin. If the assets use CORS, crossorigin is needed. If CORS won't be used, crossorigin should be omitted. If both types of assets will be present, two resource hints are necessary.

If you've found resource hints and crossorigin confusing, don't feel bad. There's a lot going on. If you find this guide confusing, please contact me! The fault is probably mine, and I'll be happy to clarify.

Reviving Keryx

2018-12-30

Keryx is an offline package manager for Debian-based operating systems.

On my previous blog, I wrote "Reviving Keryx" announcing my intention to refactor and bring the project back to life.

But then life happened. I haven't pulled up the Keryx source code in a long time.

Keryx provided a huge service for the Linux community, impacting people around the world. I wish I were in a position to continue development.

If you've landed on this page and need something like Keryx in your life, please hit me up @m4c9416 on Twitter and let me know. If there's enough continued interest, I might carve out enough time to update the code base.

Jumping to conclusions

2018-12-30

Jumping out of the dark and shouting "BOO!" at a friend is fun. Why? Because they start, maybe scream, generally act scared. Then of course, they realize that instead of a menacing ghost it's a good friend, and you both enjoy a good laugh.

Humans jump to conclusions, and they do it quickly.

If you're a fan of evolutionary biology, you might believe the tendency to jump to conclusions emerged out of a higher survival rate for those who did. If your brain says "I think I see a bear," its time is better spent calculating escape routes than contemplating, "Well what if it's just a funny-looking tree?"

If you believe god(s) made the human mind pretty much the way it is, it's reasonable to suspect she/he/it/they designed the brain to think fast and therefore live longer and more happily.

As web user experience designers, we tend to think of "experiences" as activities such as "switching tabs" or "clicking the play button."

But some experiences are moments rather than activities. Your mind sees an image and, by nature, must draw a conclusion.

If the image presented by a user interface is likely to be interpreted in a negative way ("This interface is ugly/confusing/irritating."), it doesn't matter if 500 milliseconds later everything looks great. Your interface yelled "BOO!", your user is scared, and the rest of the experience is contaminated by that negative feeling.

In some later post I'll argue that progressive JPEGs (images that initially load low-quality but then improve) are perceived with more negative emotions than baseline JPEGs (images that load top-to-bottom) because of the conclusions to which the user jumps in the first few milliseconds of the loading process.

For now I'll simply assert: user experience must be designed with an eye toward moments (even ones that last mere milliseconds) because of the human need to jump to conclusions.

Developing from my phone

2018-12-29

I installed an FTP client and text editor on my phone. Writing HTML is a bit unwieldy with the mobile keyboard, but it works.

Once I'm back to my keyboard, I'll attempt to make individual "pages" out of my blog posts, using the history API.

I'm thinking all requests will have to be served index.html via server-side rewrites and particular posts shown according to the URL by JavaScript. I'm still not sure how to handle 404 errors.
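Here's a minimal sketch of how that could look, assuming the server rewrites every path to index.html. The post table, slugs, and function names are placeholders, not what this site actually uses.

```javascript
// Hypothetical post table; the slugs are placeholders.
const POSTS = {
  '/developing-from-my-phone': 'Developing from my phone',
  '/service-worker-struggles': 'Service worker struggles',
};

// Pure routing step: map a URL path to what should be shown.
function resolveRoute(pathname, posts = POSTS) {
  if (pathname === '/' || pathname === '/index.html') {
    return { view: 'all-posts' };
  }
  // Ignore trailing slashes so /foo and /foo/ match the same post.
  const path = pathname.replace(/\/+$/, '');
  if (Object.prototype.hasOwnProperty.call(posts, path)) {
    return { view: 'post', title: posts[path] };
  }
  return { view: 'not-found' };
}

// Browser-only wiring; skipped when the file runs outside a browser.
if (typeof window !== 'undefined' && typeof document !== 'undefined') {
  const render = () => {
    const route = resolveRoute(window.location.pathname);
    document.title = route.view === 'post' ? route.title : 'Blog';
    // ...show the matching post, the full list, or a "not found" message...
  };

  // Intercept clicks on same-site links and update the URL without reloading.
  document.addEventListener('click', (event) => {
    if (!(event.target instanceof Element)) return;
    const link = event.target.closest('a[href^="/"]');
    if (!link) return;
    event.preventDefault();
    history.pushState({}, '', link.getAttribute('href'));
    render();
  });

  // Back and forward buttons fire popstate instead of loading a page.
  window.addEventListener('popstate', render);
  render();
}
```

With this approach the script can only show a "not found" message. Because the server answers every path with index.html, it still sends a 200 status, so a real 404 would need the server to know which paths exist.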

Update: I'm back at my desktop and wouldn't want to develop from a mobile device for extended periods of time.

Service worker struggles

2018-12-25

I like Chrome's Lighthouse tool. So much so that I set up a service worker for this site, just so I could earn 100 scores across the board.

I used a boilerplate service worker, but I didn't really know how it worked.

So now the worker is caching index.html, and I'm not sure how to bust the cache. I incremented the precache version, but no dice.

Oh well, I'm learning.

Update: the boilerplate has a runtime cache which caches everything. Busting the precache won't update index.html. So I removed the runtime cache logic, and now the page updates when I increment the precache version.
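For reference, a precache-only worker can be sketched roughly like this. The cache name, URL list, and helper name are assumptions for illustration; the boilerplate I started from may be organized differently.

```javascript
// Assumed names. Bump the version suffix to force a fresh precache.
const PRECACHE = 'precache-v2';
const PRECACHE_URLS = ['/', '/index.html'];

// Every cache other than the current precache is stale.
function staleCacheNames(existingNames, currentName = PRECACHE) {
  return existingNames.filter((name) => name !== currentName);
}

// Service-worker-only wiring; skipped when the file runs anywhere else.
if (typeof ServiceWorkerGlobalScope !== 'undefined' && self instanceof ServiceWorkerGlobalScope) {
  // Install: download the precache list into the versioned cache.
  self.addEventListener('install', (event) => {
    event.waitUntil(
      caches.open(PRECACHE)
        .then((cache) => cache.addAll(PRECACHE_URLS))
        .then(() => self.skipWaiting())
    );
  });

  // Activate: delete caches left over from older versions.
  self.addEventListener('activate', (event) => {
    event.waitUntil(
      caches.keys()
        .then((names) => Promise.all(staleCacheNames(names).map((name) => caches.delete(name))))
        .then(() => self.clients.claim())
    );
  });

  // Fetch: answer from the precache if possible, otherwise go to the network.
  // Network responses are not stored, so there is no runtime cache to go stale.
  self.addEventListener('fetch', (event) => {
    event.respondWith(
      caches.match(event.request).then((cached) => cached || fetch(event.request))
    );
  });
}
```

Changing PRECACHE from 'precache-v2' to 'precache-v3' changes the worker file, so the browser installs the new worker, fills a new cache, and the activate step deletes the old one.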

New website, day 2

2018-12-23

I broke down and added styles. Sorry it's so monotone. I'm not a designer, and I can sense when I'm out of my depth.

I also put this site on a free Cloudflare account. It's easy, so why not.

There's something therapeutic about writing plain old HTML.

New website

2018-12-22

I got an email today that my site had malware. It was a WordPress site that I didn't really maintain, so I guess I shouldn't be surprised.

So I said "forget this" and deleted my blog.

I've been meaning to rewrite it for a long time. But each time I started, I'd think "I should really version control this. And then automatically deploy on a commit. But to a staging environment first, then to production. Should the staging environment be behind a login? Also I'll need to bundle styles and scripts and minify everything and serve it from a CDN."

I'm exhausted just from typing it. So I'm writing up this page in gedit, and I'll upload it as index.html in FileZilla. Why? Because those tools are the ones I have handy. Maybe this site will some day be more complex. But for now? It's easy.